ROB550: Maebot Lab

Project Goal

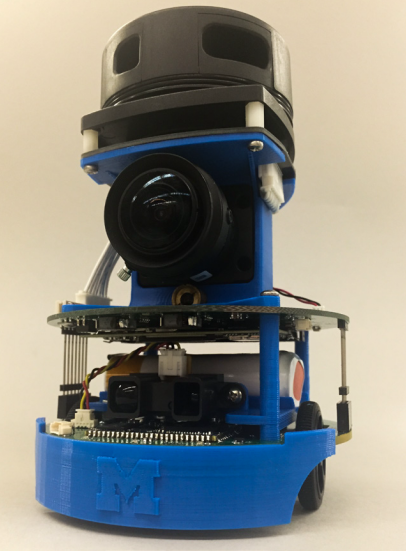

Our task is to build a mobile ground robot (MaeBot) that can autonomously explore an a priori unknown bounded region, accurately reach specific points of interest (key, treasure), and finally detect an opening in the boundary and escape. The main challenges are designing an efficient motion controller that accurately follows a desired trajectory, a robust Simultaneous Localization and Mapping (SLAM) module that corrects odometry drift, a fast motion-planning algorithm that computes paths to target destinations, and a fail-safe exploration algorithm that guides the robot to explore the bounded region completely.

Methods

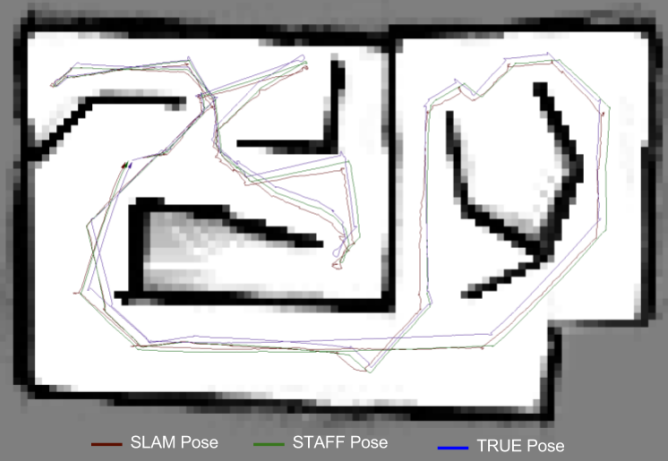

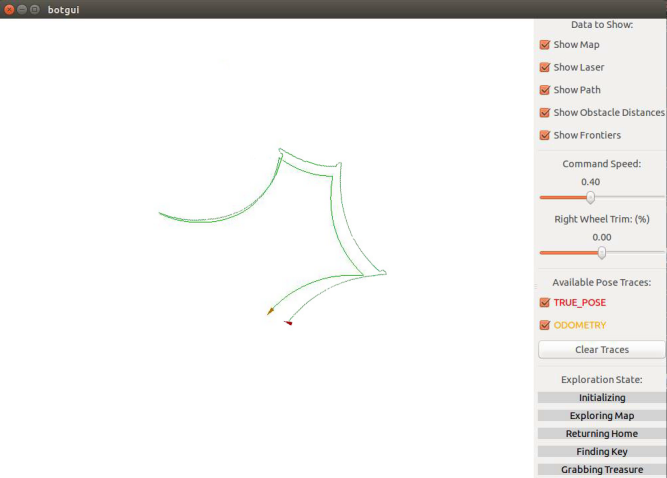

The MaeBot is assumed to follow the unicycle model. Dead reckoning from the wheel encoders does not account for errors due to quantization and measurement noise. As a result, these errors accumulate over time and cause the odometry estimate to drift away from the true pose. Therefore, a suitable localization method, such as SLAM, is required to correct the drift.
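A minimal sketch of dead reckoning under the unicycle model, assuming differential-drive wheel encoders. The wheel radius, ticks per revolution, and wheelbase below are hypothetical values, not MaeBot's actual parameters. Because encoder ticks are integers, any motion smaller than one tick is lost, which is one source of the quantization error described above.

```python
import math
from dataclasses import dataclass

# Hypothetical drive parameters for illustration only (not MaeBot's real values).
WHEEL_RADIUS_M = 0.04   # wheel radius in meters
TICKS_PER_REV = 1000    # encoder ticks per wheel revolution
WHEEL_BASE_M = 0.2      # distance between the two drive wheels in meters


@dataclass
class Pose:
    x: float      # meters
    y: float      # meters
    theta: float  # radians, wrapped to [-pi, pi]


def ticks_to_distance(ticks: int) -> float:
    """Convert an integer encoder tick count to wheel travel in meters."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * ticks / TICKS_PER_REV


def dead_reckon(pose: Pose, d_left_ticks: int, d_right_ticks: int) -> Pose:
    """Propagate the pose by one encoder sample using unicycle kinematics."""
    d_left = ticks_to_distance(d_left_ticks)
    d_right = ticks_to_distance(d_right_ticks)
    d_s = 0.5 * (d_left + d_right)                # forward travel of the robot center
    d_theta = (d_right - d_left) / WHEEL_BASE_M   # change in heading
    mid_heading = pose.theta + 0.5 * d_theta      # integrate along the midpoint heading
    new_theta = pose.theta + d_theta
    return Pose(
        x=pose.x + d_s * math.cos(mid_heading),
        y=pose.y + d_s * math.sin(mid_heading),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),
    )


# Usage: both wheels advance one full revolution, so the robot moves about 0.251 m ahead.
pose = dead_reckon(Pose(0.0, 0.0, 0.0), 1000, 1000)
```

Each call integrates one encoder sample; the small errors from every call compound, which is why the estimate drifts without an external correction such as SLAM.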

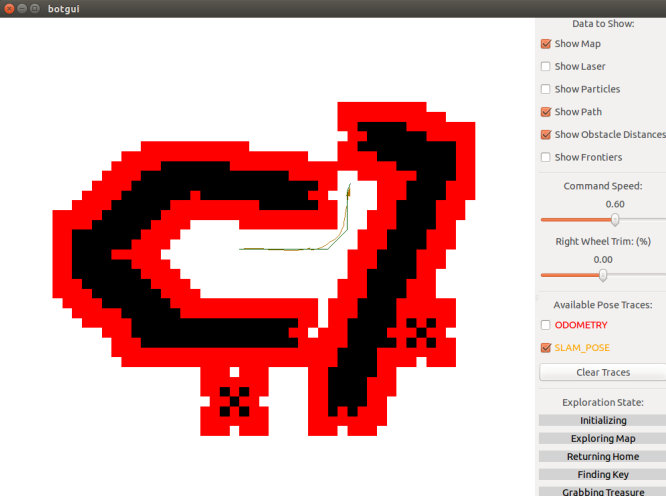

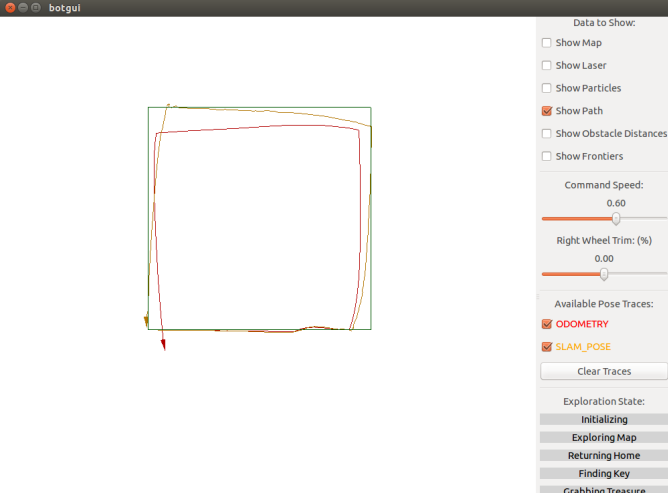

We implement two feedback controllers based on the PID structure, one for heading and one for steering. For the heading controller, we use a PI controller (no derivative term), because the motors' intrinsic damping is already large and the accumulated error is negligible. For the steering controller, we use only a P controller, because the intrinsic damping is large and an I term induced unexpected overshoot.

The current position of the robot is determined by fusing information from the odometry and SLAM modules. Although SLAM is accurate, its update rate is too low for real-time control, so we use encoder odometry to estimate the robot's pose between successive SLAM poses.
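The sketch below, assuming a generic discrete-time PID form with hypothetical gains, shows a PI heading loop (derivative gain zero) and a P steering loop (integral and derivative gains zero), plus one common way to bridge SLAM updates: applying the odometry increment accumulated since the last SLAM timestamp on top of the latest SLAM pose. The class `PID`, the helper `fuse_pose`, the gains, and the integral clamp are illustrative assumptions, not the project's code; the error signal each loop regulates is left to the caller.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    theta: float


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


class PID:
    """Discrete-time PID; setting ki and/or kd to zero gives the PI or P variants."""

    def __init__(self, kp: float, ki: float = 0.0, kd: float = 0.0,
                 integral_limit: float = float("inf")):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral_limit = integral_limit  # clamp to limit integral windup
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        self.integral = max(-self.integral_limit, min(self.integral_limit, self.integral))
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical gains: PI for heading (no D term), P only for steering.
heading_controller = PID(kp=1.0, ki=0.1, integral_limit=1.0)
steering_controller = PID(kp=2.0)


def fuse_pose(slam_pose: Pose, odom_at_slam: Pose, odom_now: Pose) -> Pose:
    """Apply the odometry motion since the last SLAM pose on top of that SLAM pose."""
    # Odometry increment expressed in the robot frame at the SLAM timestamp.
    dx = odom_now.x - odom_at_slam.x
    dy = odom_now.y - odom_at_slam.y
    c, s = math.cos(odom_at_slam.theta), math.sin(odom_at_slam.theta)
    local_x = c * dx + s * dy
    local_y = -s * dx + c * dy
    # Re-express that increment in the SLAM map frame.
    c, s = math.cos(slam_pose.theta), math.sin(slam_pose.theta)
    return Pose(
        x=slam_pose.x + c * local_x - s * local_y,
        y=slam_pose.y + s * local_x + c * local_y,
        theta=wrap_angle(slam_pose.theta + odom_now.theta - odom_at_slam.theta),
    )
```

Composing the increment in the robot frame, rather than adding raw odometry coordinates, keeps the estimate consistent even when the odometry frame has drifted in heading relative to the SLAM map.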

SLAM is the task of building a map of an a priori unknown area while simultaneously using the map constructed so far to localize the robot. In this project, we use an occupancy grid to represent the map and a 2D lidar as the sensor. To localize the robot accurately, we develop an action model (a sampling-based odometry motion model), a sensor model (particle weight computation), and a particle filter. Finally, we implement the A* algorithm to plan paths to target locations, together with a map exploration strategy.
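A compact particle-filter sketch of the localization step. It assumes the rot1/trans/rot2 sampling odometry motion model described in Thrun, Burgard, and Fox's *Probabilistic Robotics* as the action model, with hypothetical noise parameters `ALPHAS`. The sensor model here is a deliberately simplified stand-in, not the project's: it scores each particle by how many lidar beam endpoints land in occupied cells of a thresholded boolean grid with a hypothetical `resolution`. Resampling uses the low-variance sampler.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical odometry-noise parameters (alpha1..alpha4 in the standard model).
ALPHAS = (0.05, 0.005, 0.05, 0.005)


@dataclass
class Particle:
    x: float
    y: float
    theta: float
    weight: float = 1.0


def wrap(a):
    return math.atan2(math.sin(a), math.cos(a))


def sample_motion(p, prev_odom, cur_odom, rng, alphas=ALPHAS):
    """Action model: split odometry into rot1-trans-rot2, perturb each, apply to p."""
    dx, dy = cur_odom[0] - prev_odom[0], cur_odom[1] - prev_odom[1]
    trans = math.hypot(dx, dy)
    rot1 = wrap(math.atan2(dy, dx) - prev_odom[2]) if trans > 1e-6 else 0.0
    rot2 = wrap(cur_odom[2] - prev_odom[2] - rot1)
    a1, a2, a3, a4 = alphas
    rot1_hat = rot1 - rng.gauss(0.0, math.sqrt(a1 * rot1**2 + a2 * trans**2))
    trans_hat = trans - rng.gauss(0.0, math.sqrt(a3 * trans**2 + a4 * (rot1**2 + rot2**2)))
    rot2_hat = rot2 - rng.gauss(0.0, math.sqrt(a1 * rot2**2 + a2 * trans**2))
    return Particle(
        p.x + trans_hat * math.cos(p.theta + rot1_hat),
        p.y + trans_hat * math.sin(p.theta + rot1_hat),
        wrap(p.theta + rot1_hat + rot2_hat),
        p.weight,
    )


def scan_weight(p, scan, grid, resolution):
    """Simplified sensor model: count lidar endpoints that land in occupied cells."""
    hits = 0
    for range_m, bearing in scan:  # (range in meters, bearing in radians)
        ex = p.x + range_m * math.cos(p.theta + bearing)
        ey = p.y + range_m * math.sin(p.theta + bearing)
        row, col = int(ey // resolution), int(ex // resolution)
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]) and grid[row][col]:
            hits += 1
    return hits + 1e-3  # small floor so no particle gets exactly zero weight


def low_variance_resample(particles, rng):
    """Draw one random offset, then take n evenly spaced samples over the weights."""
    n = len(particles)
    step = sum(p.weight for p in particles) / n
    u = rng.uniform(0.0, step)
    out, i, cumulative = [], 0, particles[0].weight
    for m in range(n):
        target = u + m * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += particles[i].weight
        src = particles[i]
        out.append(Particle(src.x, src.y, src.theta, 1.0 / n))
    return out


def weighted_pose(particles):
    total = sum(p.weight for p in particles)
    x = sum(p.weight * p.x for p in particles) / total
    y = sum(p.weight * p.y for p in particles) / total
    s = sum(p.weight * math.sin(p.theta) for p in particles)
    c = sum(p.weight * math.cos(p.theta) for p in particles)
    return x, y, math.atan2(s, c)  # circular mean for the heading


def pf_update(particles, prev_odom, cur_odom, scan, grid, resolution, rng):
    """One filter step: predict with odometry, weight with lidar, estimate, resample."""
    moved = [sample_motion(p, prev_odom, cur_odom, rng) for p in particles]
    for p in moved:
        p.weight = scan_weight(p, scan, grid, resolution)
    return low_variance_resample(moved, rng), weighted_pose(moved)
```

The low-variance sampler uses a single random number per resampling step and has lower sampling variance than drawing n independent samples, which helps keep the particle set from collapsing prematurely.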